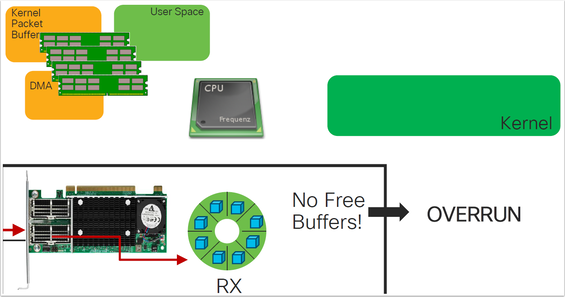

Just like a physical network switch or router, a virtual machine's vNIC must have buffers to temporarily store incoming network frames for processing. During periods of very heavy load, the guest may not have the cycles to handle all the incoming frames, so the buffer is used to queue them temporarily. If the buffer fills more quickly than it is emptied, the vNIC driver has no choice but to drop additional incoming frames. This is referred to as a full buffer or ring exhaustion.
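To make the fill-versus-drain idea concrete, here is a toy model of a fixed-size RX ring. It is only a sketch: the function name, the per-tick rates, and the tick loop are all made up for illustration and are not how the vmxnet3 driver is actually implemented.

```python
def simulate_rx_ring(ring_size, arrivals_per_tick, drained_per_tick, ticks):
    """Count frames dropped when a fixed-size RX ring fills faster than it drains.

    Hypothetical model: every tick a fixed number of frames arrive, then the
    guest drains a fixed number. Real traffic is bursty, this is not.
    """
    queued = 0
    dropped = 0
    for _ in range(ticks):
        # Frames arrive first; anything that does not fit in the ring is dropped.
        free_slots = ring_size - queued
        accepted = min(arrivals_per_tick, free_slots)
        dropped += arrivals_per_tick - accepted
        queued += accepted
        # The guest then drains as many frames as it has cycles for.
        queued -= min(queued, drained_per_tick)
    return dropped


# Same load, two ring sizes: the larger ring absorbs the burst for longer.
print(simulate_rx_ring(512, 100, 80, 100))
print(simulate_rx_ring(4096, 100, 80, 100))
```

In this model a bigger ring only buys time. If the guest never catches up, the larger ring eventually fills and drops frames too.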

OK, I said moving packets, but technically I guess it's moving network frames, right? Or should we go with bits?

Anyway, there are a number of VMware KB articles covering dropped packets and the resulting performance problems in a virtual guest OS; KB2039495 and KB50121760 are just two of them. These dropped-packet/out-of-buffers issues were also prevalent on NSX-V and NSX-T edges, since their vNICs need to process the frames(?) in the same manner.

I have troubleshot many issues on NSX-V edges that had high packet loss and out-of-buffers counts. One problem was that prior to NSX-V version 6.4.6, the vNIC RX buffer size was set at 512, even if you increased the size of the edge!

I have also seen this behavior on NSX-T edges, especially a T-0 edge, on versions below 3.1.

Since the KB articles and several other posts go into the details of checking and modifying the RX buffer size on an NSX edge or a guest VM, I won't repeat them all here. Instead, here are the general steps and commands that I have used to check for RX buffer issues on an NSX edge or VM:

First, SSH to the ESXi host that the edge or VM is running on and list the ports to find the PortNum of the vNIC:

[root@esx4:~] net-stats -l

Sample output:

PortNum Type SubType SwitchName MACAddress ClientName

33554539 5 9 DvsPortset-0 00:50:56:b5:60:9e Edge01-1.eth0
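If the host has a lot of ports, picking the right PortNum out of the net-stats -l output by eye gets tedious. Here is a rough Python sketch that does the lookup on saved output. The function name is my own, and it assumes the six-column layout shown in the sample above.

```python
def find_port_numbers(net_stats_output, client_substring):
    """Return PortNum values whose ClientName contains client_substring.

    Assumes the column order from the sample:
    PortNum Type SubType SwitchName MACAddress ClientName
    """
    matches = []
    for line in net_stats_output.splitlines():
        # Split into at most six fields so a ClientName with spaces stays whole.
        fields = line.split(None, 5)
        # Data rows have six columns and start with a numeric PortNum.
        if len(fields) == 6 and fields[0].isdigit():
            port_num, client_name = fields[0], fields[5]
            if client_substring in client_name:
                matches.append(int(port_num))
    return matches


sample = """PortNum Type SubType SwitchName MACAddress ClientName
33554539 5 9 DvsPortset-0 00:50:56:b5:60:9e Edge01-1.eth0"""

print(find_port_numbers(sample, "Edge01-1"))  # [33554539]
```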

Next, retrieve the switch statistics for that port by passing its PortNum to esxcli network port stats get:

[root@esx4:~] esxcli network port stats get -p 33554539

Sample output:

Packet statistics for port 33554539

Packets received: 100120460052

Packets sent: 48907954505

Bytes received: 64575706925507

Bytes sent: 9407670139350

Broadcast packets received: 789

Broadcast packets sent: 50

Multicast packets received: 0

Multicast packets sent: 4

Unicast packets received: 100120459263

Unicast packets sent: 48907954451

Receive packets dropped: 2326648

Transmit packets dropped: 0
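To put the drop counter in perspective, the port statistics can be turned into a rough percentage. This is a minimal sketch with helper names of my own choosing. It assumes the "Name: value" layout shown above and simply divides drops by packets received, which is an approximation rather than an official VMware metric.

```python
def parse_port_stats(text):
    """Parse 'Name: value' lines from the port stats output into a dict of ints."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        value = value.strip()
        # Skip the header line and anything without a numeric value.
        if sep and value.isdigit():
            stats[name.strip()] = int(value)
    return stats


def rx_drop_percent(stats):
    """Rough receive drop rate: dropped / received * 100 (an approximation)."""
    received = stats.get("Packets received", 0)
    dropped = stats.get("Receive packets dropped", 0)
    if received == 0:
        return 0.0
    return dropped / received * 100


sample = """Packet statistics for port 33554539
Packets received: 100120460052
Packets sent: 48907954505
Receive packets dropped: 2326648
Transmit packets dropped: 0"""

stats = parse_port_stats(sample)
print(f"RX drop rate: {rx_drop_percent(stats):.4f}%")
```

The percentage looks tiny for this port, but the counters are cumulative since the port was connected, so drops concentrated in short bursts can still hurt.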

Ouch! So yes, there are receive packets dropped there. I then run the vsish command against the same port number to list the vmxnet3 RX statistics for that port:

[root@esx4:~] vsish -e get /net/portsets/DvsPortset-0/ports/33554539/vmxnet3/rxSummary

Sample output abbreviated:

stats of a vmxnet3 vNIC rx queue {

LRO pkts rx ok:0

LRO bytes rx ok:0

pkts rx ok:100341287995

bytes rx ok:98187846706473

unicast pkts rx ok:100341286995

unicast bytes rx ok:98187846646473

multicast pkts rx ok:0

multicast bytes rx ok:0

broadcast pkts rx ok:1000

broadcast bytes rx ok:60000

running out of buffers:2368132

pkts receive error:0

1st ring size:512

2nd ring size:128

# of times the 1st ring is full:2326634

# of times the 2nd ring is full:0

fail to map a rx buffer:47

request to page in a buffer:47
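The same kind of check can be scripted against the rxSummary output. The sketch below uses my own function names and assumes the "name:value" lines shown in the sample. It flags a vNIC whose first ring has filled while its size is still below 4096. It only reads saved text and does not call vsish itself.

```python
def parse_rx_summary(text):
    """Parse 'name:value' lines from the vmxnet3 rxSummary output into ints."""
    stats = {}
    for line in text.splitlines():
        # rpartition splits on the last colon and skips lines that have none.
        name, sep, value = line.rpartition(":")
        value = value.strip()
        if sep and value.isdigit():
            stats[name.strip()] = int(value)
    return stats


def first_ring_undersized(stats, max_ring_size=4096):
    """True if buffers ran out, the 1st ring filled, and the ring can still grow."""
    ran_out = stats.get("running out of buffers", 0) > 0
    ring_full = stats.get("# of times the 1st ring is full", 0) > 0
    ring_size = stats.get("1st ring size", max_ring_size)
    return ran_out and ring_full and ring_size < max_ring_size


sample = """stats of a vmxnet3 vNIC rx queue {
running out of buffers:2368132
pkts receive error:0
1st ring size:512
2nd ring size:128
# of times the 1st ring is full:2326634
# of times the 2nd ring is full:0"""

summary = parse_rx_summary(sample)
print(first_ring_undersized(summary))  # True
```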

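From the above, we see that the running out of buffers count is high, and that the 1st ring size is set to 512. Note also that the number of times the 1st ring was full (2326634) lines up closely with the Receive packets dropped count (2326648) from the port statistics. That ring size is the limiting factor; it can be increased to 4096 on the NSX edges and on most guest OS VMs.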

VMware KB2039495 and KB1010071 list the steps to modify the RX buffer values on Windows and Linux VMs.

The latest NSX versions have addressed the RX buffer issue as part of edge sizing, so it should come up less often. However, it can still be an issue on any virtual OS or appliance handling high network traffic, as I will try to show in the next post...
