So what is vTPM? I asked myself that when our environments needed to support Windows 11 VMs. We also needed to be able to encrypt the VM files.

vTPM stands for Virtual Trusted Platform Module. A TPM is a hardware chip in the server that stores cryptographic keys in a tamper-resistant way, making them very difficult for an attacker to extract or modify. A vTPM gives a virtual machine the same capability in software. This kind of security device is becoming a new security baseline and may be required for all Microsoft operating systems, and others, in the near future.

Check out the Microsoft Windows 11 requirements here.

vSphere 6.5 added VM Encryption, which can encrypt all of a virtual machine's files. That covers not only the VM files and VMDKs, but also the VM's metadata files and core dump files. Core dump files? More on that later.

Then vSphere 6.7 added support for the TPM 2.0 cryptoprocessor. This made it possible to create a Virtual Trusted Platform Module (vTPM) device and add it to a Windows 10, Windows 11, or Windows Server 2016 (or later) VM. Here's one VMware doc with more details.

Note that a vTPM stores its credentials and keys in the VM's *.nvram file, and that file is encrypted with VM Encryption. So when backing up a VM with vTPM enabled, be sure to include the *.nvram file!
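If you script your backups, a pre-flight check for the *.nvram file is cheap insurance. Below is a minimal Python sketch under stated assumptions: `has_nvram` and `check_vtpm_backup` are hypothetical helpers (not part of any VMware or backup product API), and they assume your backup tool can give you the list of file paths in a VM's backup set.

```python
from pathlib import PurePosixPath


def has_nvram(backup_files: list[str]) -> bool:
    """True if at least one path in the backup set ends in .nvram (case-insensitive)."""
    return any(PurePosixPath(f).suffix.lower() == ".nvram" for f in backup_files)


def check_vtpm_backup(vm_name: str, vtpm_enabled: bool, backup_files: list[str]) -> None:
    """Hypothetical pre-backup check: raise if a vTPM-enabled VM's backup lacks its .nvram file."""
    if vtpm_enabled and not has_nvram(backup_files):
        raise ValueError(
            f"{vm_name}: vTPM is enabled but no *.nvram file is in the backup set; "
            "the vTPM credentials and keys would not be backed up"
        )


if __name__ == "__main__":
    # Example file list; in practice this would come from your backup tool.
    files = ["win11-01/win11-01.vmx", "win11-01/win11-01.vmdk", "win11-01/win11-01.nvram"]
    check_vtpm_backup("win11-01", True, files)  # passes silently
```

The check only runs when vTPM is enabled, so ordinary VMs without a vTPM are not flagged.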

Enabling vTPM in vSphere

Now you have your brand-new Windows 11 install files or ISO (I don't need to know where you got them), and you want to create a new VM with them.

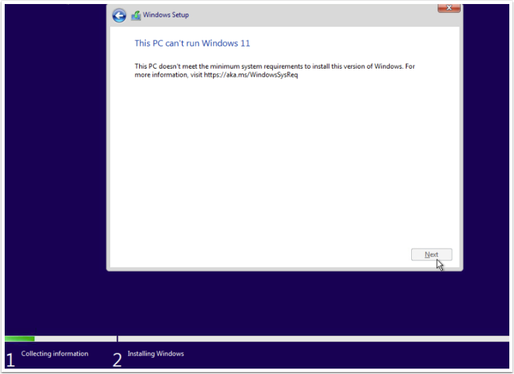

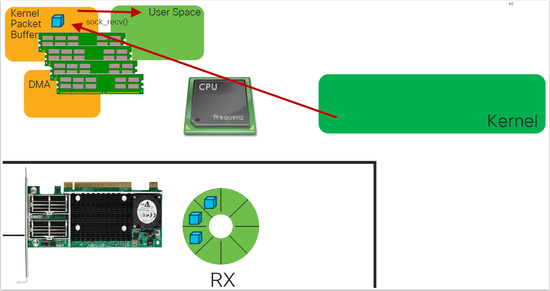

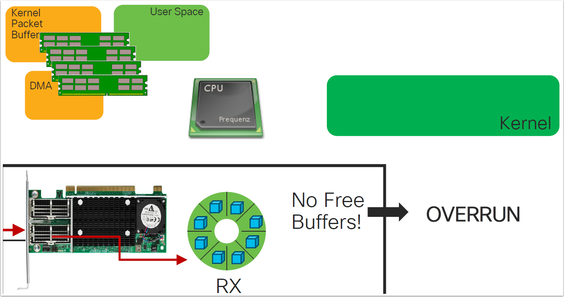

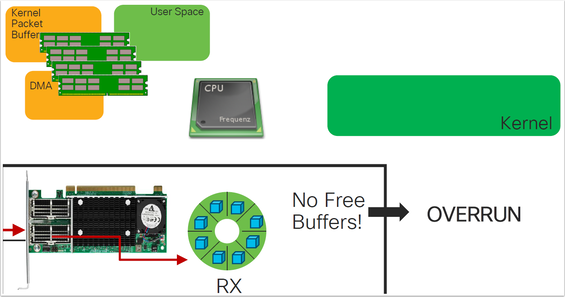

If you try to create a new Windows 11 VM before setting up your environment to support vTPM, you will get this awful setup error:

If you tried to create a new Windows 11 vm before setting up your environment to support vTPM you will get this awful setup error:

There are several VMware articles that step you through enabling vTPM support, so I will just outline the high-level steps I followed.

To use a vTPM, your vSphere environment must meet these requirements:

Virtual machine requirements:

- EFI firmware
- Hardware version 14 or later

vSphere component requirements:

- vCenter Server 6.7 or later for Windows virtual machines
- Virtual machine encryption (to encrypt the virtual machine home files)
- Key provider configured for vCenter Server. See Set up a Key Management Server Cluster.
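To keep the list straight, here is a small Python sketch that turns these requirements into a checklist. The `VTpmEnvironment` fields and the `vtpm_blockers` function are hypothetical; the sketch does not query vCenter, so you would fill in the values yourself (for example, from what the vSphere Client shows).

```python
from dataclasses import dataclass


@dataclass
class VTpmEnvironment:
    """Hypothetical snapshot of the settings named in the vTPM requirements list."""
    firmware: str                      # VM boot firmware, e.g. "efi" or "bios"
    hardware_version: int              # VM hardware version, e.g. 14
    vcenter_version: tuple[int, int]   # vCenter Server (major, minor), e.g. (6, 7)
    vm_encryption: bool                # VM home files are encrypted
    key_provider_configured: bool      # a key provider is set up on vCenter Server


def vtpm_blockers(env: VTpmEnvironment) -> list[str]:
    """Return the unmet requirements; an empty list means none were found."""
    problems = []
    if env.firmware.lower() != "efi":
        problems.append("VM firmware must be EFI")
    if env.hardware_version < 14:
        problems.append("VM hardware version must be 14 or later")
    if env.vcenter_version < (6, 7):  # tuple comparison: (7, 0) > (6, 7)
        problems.append("vCenter Server must be 6.7 or later")
    if not env.vm_encryption:
        problems.append("VM encryption must be enabled for the VM home files")
    if not env.key_provider_configured:
        problems.append("a key provider must be configured for vCenter Server")
    return problems
```

For example, `vtpm_blockers(VTpmEnvironment("bios", 13, (6, 7), False, True))` would report the firmware, hardware version, and encryption items as unmet.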

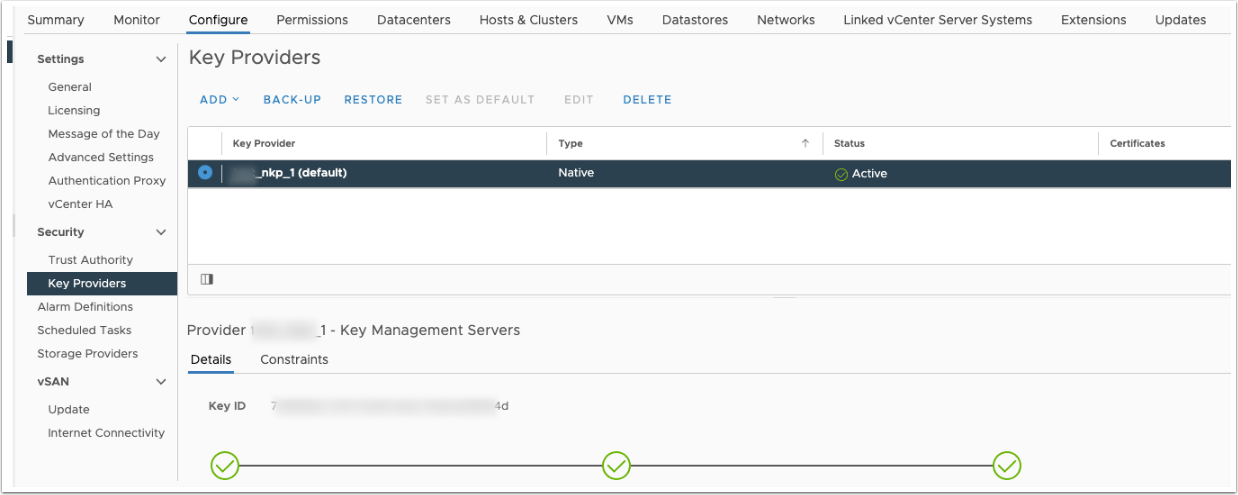

Below, I added a Native Key Provider to my vCenter:

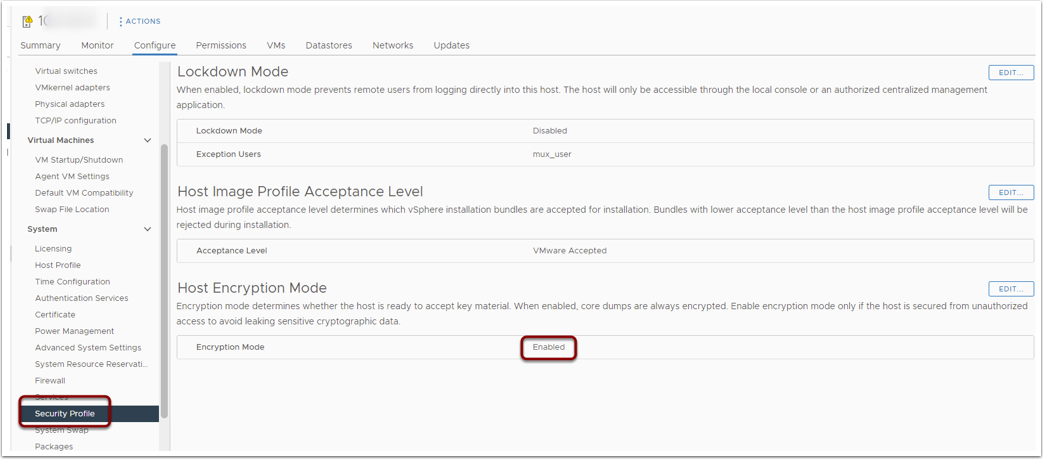

This will also enable Host Encryption Mode on your ESXi servers:

Now that the key provider is configured on vCenter and we have confirmed the ESXi hosts are in encryption mode, we can add the vTPM to the Windows 11 VM.

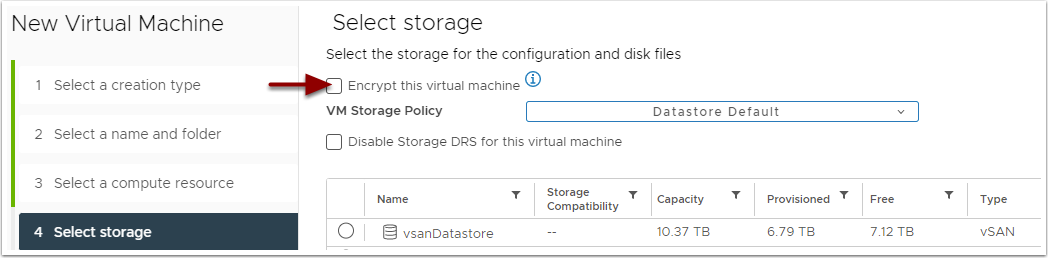

When creating a new virtual machine, there is now the option to Encrypt this virtual machine under Select Storage:

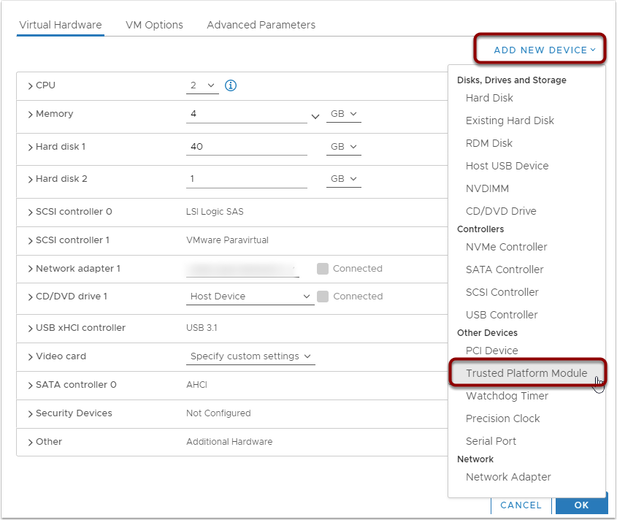

Next, on the Virtual Hardware tab, select Add New Device and choose Trusted Platform Module:

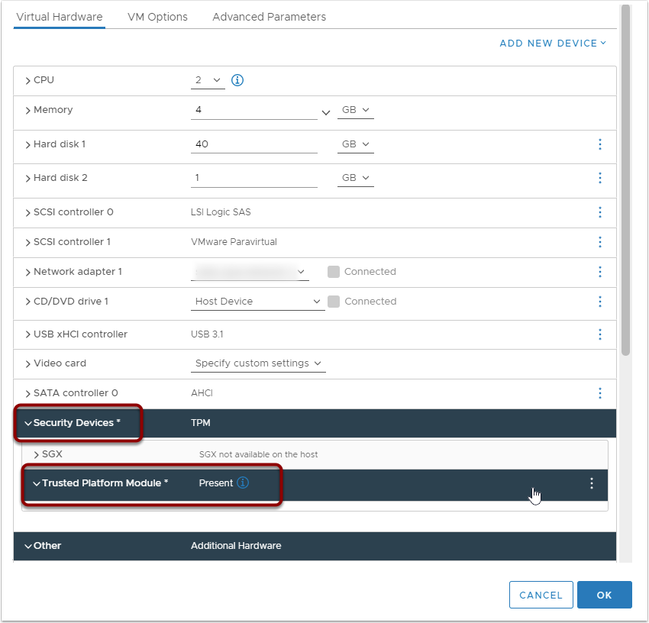

The Trusted Platform Module now shows as added to the VM:

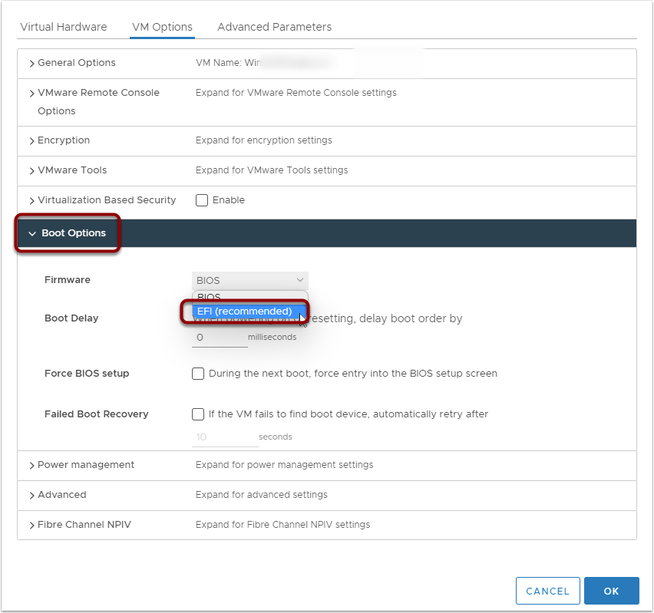

Now, on the VM Options tab, set the Boot Options to EFI:

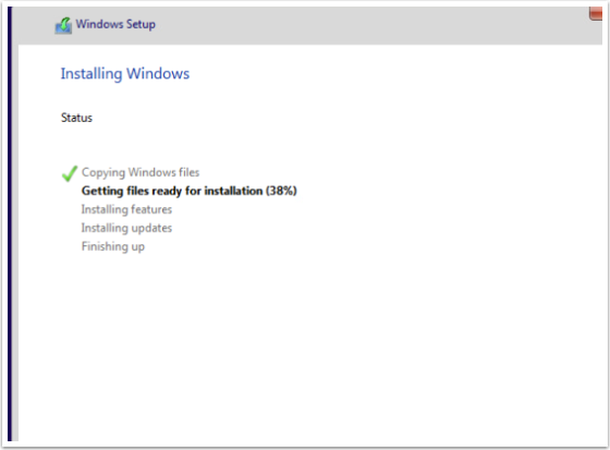

And voilà! The Windows 11 installer will now run!

So once you have this all set up, you can now deploy Windows 11 and the new Windows Server versions.
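To double-check a finished VM, you could look at its .vmx configuration file. This Python sketch assumes the settings from the steps above appear in the .vmx as `firmware = "efi"`, `vtpm.present = "TRUE"`, and `virtualHW.version = "<n>"`. Those key names are my assumption, so compare against a .vmx from your own environment before relying on it. The helper names are hypothetical, and encryption status is not checked here.

```python
def parse_vmx(text: str) -> dict[str, str]:
    """Parse simple `key = "value"` lines from .vmx text; keys are lower-cased."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and anything that is not key = value
        key, _, value = line.partition("=")
        settings[key.strip().lower()] = value.strip().strip('"')
    return settings


def vtpm_vmx_issues(settings: dict[str, str]) -> list[str]:
    """Report which of the assumed vTPM-related .vmx settings look wrong."""
    issues = []
    if settings.get("firmware", "").lower() != "efi":
        issues.append('firmware is not "efi"')
    if settings.get("vtpm.present", "").upper() != "TRUE":
        issues.append("no vTPM device (vtpm.present is not TRUE)")
    try:
        hw = int(settings.get("virtualhw.version", "0"))
    except ValueError:
        hw = 0  # treat an unparseable version as too old
    if hw < 14:
        issues.append("virtual hardware version is below 14")
    return issues
```

Reading the file with `open(path).read()` and passing the text to `parse_vmx` keeps the check independent of any vSphere SDK.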

Ah, what about the encrypted core dumps? I will have more details on core dumps in my next post, vTPM Support on vSphere Part 2.